BDD-X Dataset Papers With Code

Berkeley Deep Drive-X (eXplanation) is a dataset composed of over 77 hours of driving within 6,970 videos. The videos are taken in diverse driving conditions, e.g. day/night, highway/city/countryside, and summer/winter. On average 40 seconds long, each video contains around 3-4 actions, e.g. speeding up, slowing down, or turning right, all of which are annotated with a description and an explanation. Our dataset contains over 26K activities in over 8.4M frames.
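The figures quoted above can be cross-checked with a little arithmetic. The sketch below is only a sanity check on the rounded numbers in the description. The constant and function names are made up for illustration, and the frame rate it derives is an inference from those numbers, not something the description states.

```python
# Rounded figures taken from the dataset description; values quoted as
# "over N" are treated as approximate lower bounds.
NUM_VIDEOS = 6_970
AVG_VIDEO_SECONDS = 40
TOTAL_ACTIVITIES = 26_000
TOTAL_FRAMES = 8_400_000


def summarize():
    """Derive aggregate statistics from the quoted figures."""
    total_seconds = NUM_VIDEOS * AVG_VIDEO_SECONDS
    return {
        # Should land near the quoted "over 77 hours".
        "total_hours": total_seconds / 3600,
        # Should land inside the quoted "around 3-4 actions" per video.
        "activities_per_video": TOTAL_ACTIVITIES / NUM_VIDEOS,
        # Implied frame rate (an inference, not stated in the description).
        "implied_fps": TOTAL_FRAMES / total_seconds,
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value:.2f}")
```

Under these assumptions the numbers are mutually consistent: roughly 77.4 hours of video, about 3.7 annotated activities per video, and an implied rate of about 30 frames per second.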

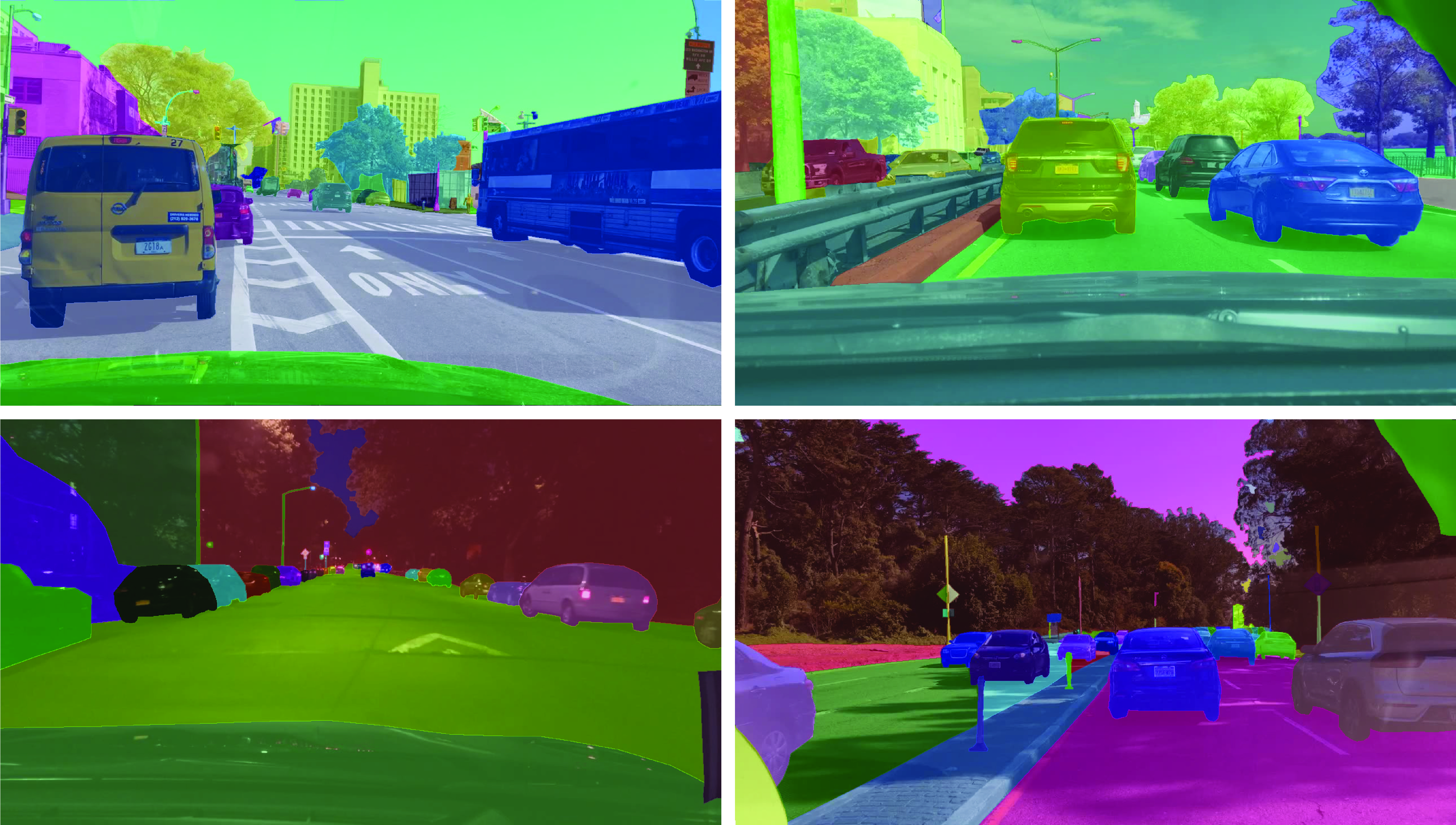

GitHub - mseg-dataset/mseg-semantic: An Official Repo of CVPR '20 MSeg: A Composite Dataset for Multi-Domain Segmentation

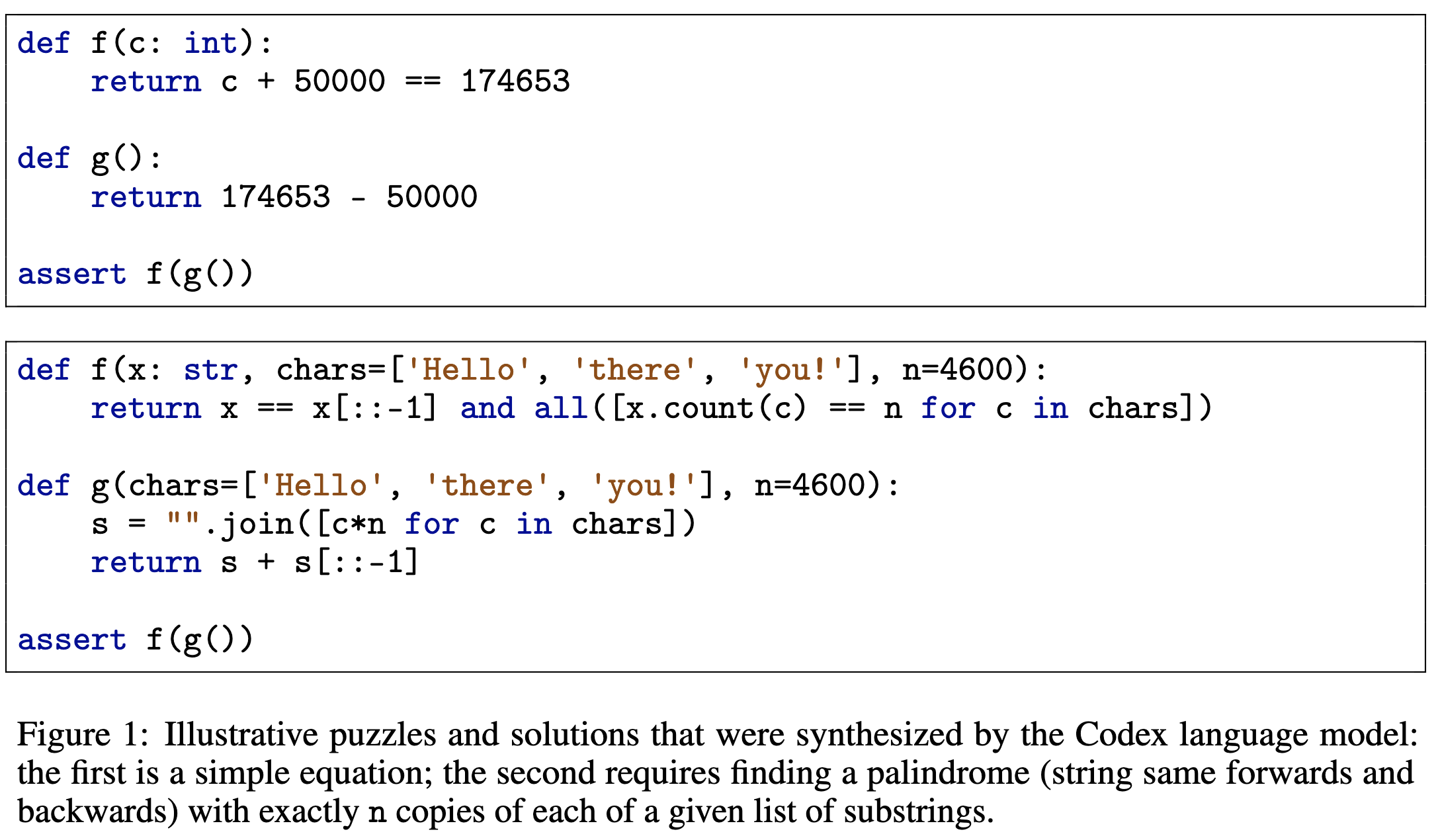

2022-8-7 arXiv roundup: Adam and sharpness, Recursive self-improvement for coding, Training and model tweaks

GitHub - microsoft/X-Decoder: [CVPR 2023] Official Implementation of X-Decoder for generalized decoding for pixel, image and language

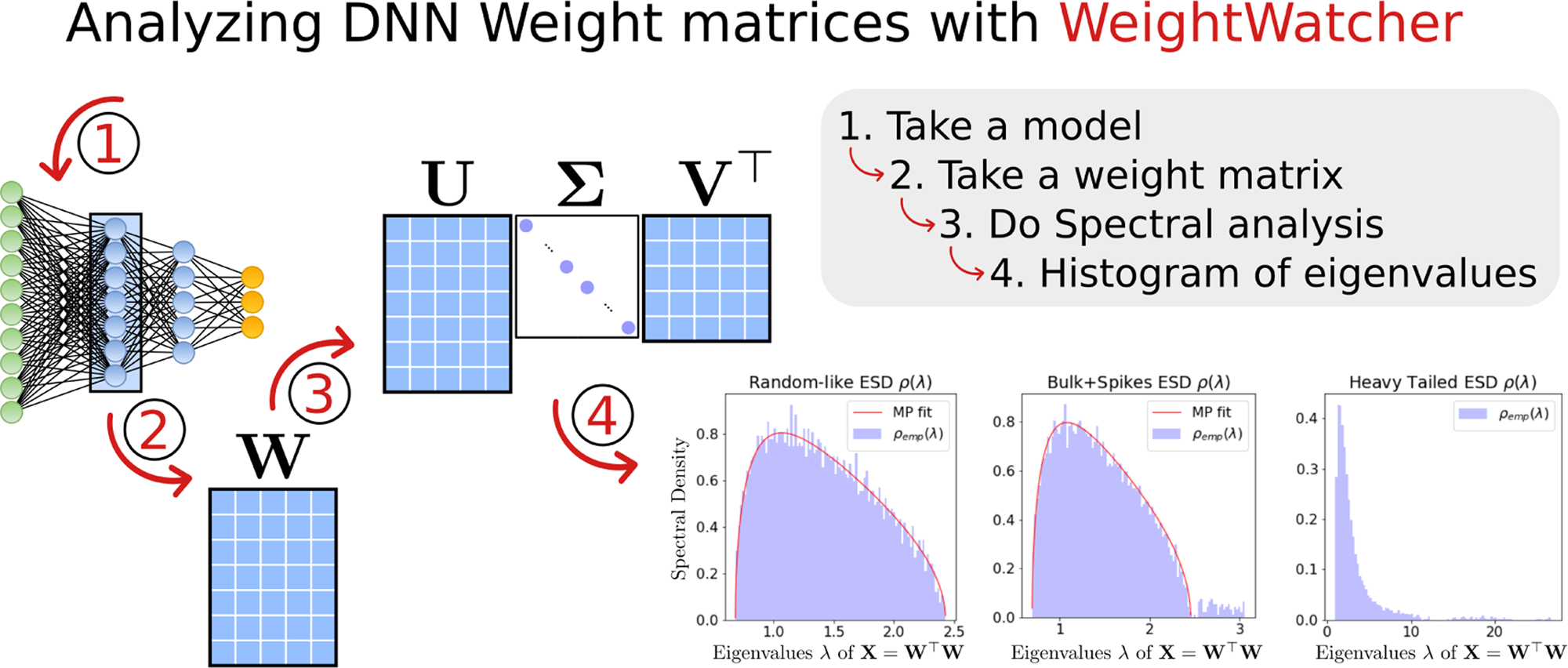

Predicting trends in the quality of state-of-the-art neural networks without access to training or testing data

Towards Knowledge-driven Autonomous Driving

Exploring the Berkeley Deep Drive Autonomous Vehicle Dataset, by Jimmy Guerrero, Voxel51

Binary decision diagram - Wikipedia

Berkeley DeepDrive

BDD100K: A Large-scale Diverse Driving Video Database – The Berkeley Artificial Intelligence Research Blog

Exploring the Berkeley Deep Drive Autonomous Vehicle Dataset, by Jimmy Guerrero, Voxel51

Number of nodes and code sizes for BDD machine and QDD machine.

PDF] Local Interpretations for Explainable Natural Language Processing: A Survey